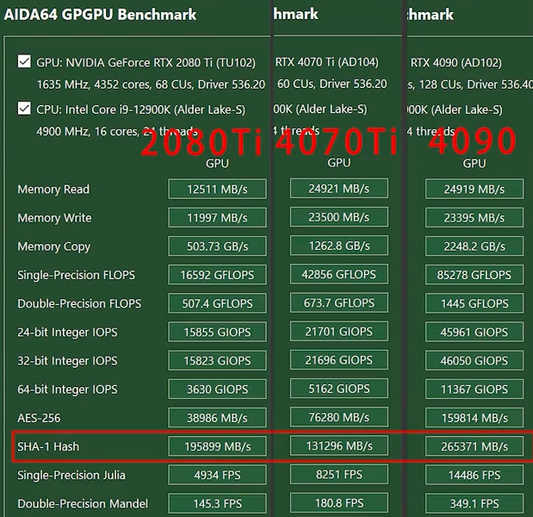

Many people wonder whether the custom-upgraded RTX 2080 Ti is just a toy. I always tell them that we've been running RTX 2080 Ti 22G cards in production-level computing clusters since 2021. These rigs cut our computing costs to one fifth of what renting from AWS would cost.

We recently purchased a new batch of upgraded RTX 2080 Ti cards and several computing rigs. Because our customers can't afford much, our budgets are limited. All components are used parts that we bought on eBay and similar marketplaces. Don't ask me why we don't buy new parts: spending $$$ on brand-new parts is not our goal.

Let me walk you through how to put together an 8 x RTX 2080 Ti 22G rig.

We bought the following standalone components:

- A Supermicro 4029 chassis and motherboard
- 2 x Intel Xeon Gold 6162 CPUs
- 8 x 32 GB DDR4-2133 memory

First, install the CPUs and memory.

Next come the GPUs. We use 8 x ZOTAC RTX 2080 Ti cards that we upgraded to 22 GB of VRAM each.

We got a bunch of these:

Install the GPUs one by one.

We chose Ubuntu 20.04 as the base system. Although it's less secure than CentOS, it gives us better compatibility with our DIY deep learning software.

Install Ubuntu, but do not install the graphics driver that comes with Ubuntu.
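If you want to double-check that no NVIDIA kernel driver came along with the Ubuntu install, you can look at the loaded kernel modules. Below is a minimal sketch in Python, assuming the standard Linux `/proc/modules` format (one module per line, name in the first field). It also reports `nouveau`, the open-source driver for NVIDIA cards, which you may see loaded on a fresh install. `loaded_gpu_modules` and `read_loaded_gpu_modules` are hypothetical helpers, not part of any tool.

```python
from pathlib import Path


def loaded_gpu_modules(proc_modules_text: str) -> set:
    """Return GPU-related kernel module names found in /proc/modules text.

    Hypothetical helper: matches the open-source `nouveau` driver and any
    module whose name starts with `nvidia` (nvidia, nvidia_drm, nvidia_uvm, ...).
    """
    # The first whitespace-separated field of each non-empty line is the module name
    names = {line.split()[0] for line in proc_modules_text.splitlines() if line.strip()}
    return {name for name in names if name == "nouveau" or name.startswith("nvidia")}


def read_loaded_gpu_modules(path: str = "/proc/modules") -> set:
    # Read the live module list from the running kernel
    return loaded_gpu_modules(Path(path).read_text())
```

On a fresh system without Ubuntu's NVIDIA packages, `read_loaded_gpu_modules()` should not return any `nvidia*` names.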

Once the system is up, run `lspci | grep VGA`. You should see all eight 2080 Ti cards recognized by the system.
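To automate this check, here is a minimal sketch in Python that counts NVIDIA display controllers in `lspci` output. It assumes each card appears as one `VGA compatible controller` line mentioning `NVIDIA` (the cards' extra PCI functions, such as HD audio, are not VGA lines). The extra `NVIDIA` filter is there because server boards often also have onboard BMC graphics that `grep VGA` would list too. `count_nvidia_vga` and `check_gpu_count` are hypothetical helpers.

```python
import subprocess


def count_nvidia_vga(lspci_text: str) -> int:
    """Count NVIDIA VGA controllers in `lspci` output (mirrors `lspci | grep VGA`)."""
    return sum(
        1
        for line in lspci_text.splitlines()
        # Skip onboard graphics (no "NVIDIA") and non-VGA functions like HD audio
        if "VGA" in line and "NVIDIA" in line
    )


def check_gpu_count(expected: int = 8) -> bool:
    # Run lspci directly and parse its output instead of piping through grep
    out = subprocess.run(["lspci"], capture_output=True, text=True, check=True).stdout
    found = count_nvidia_vga(out)
    print(f"Found {found} NVIDIA VGA devices (expected {expected})")
    return found == expected
```

On the rig itself, `check_gpu_count()` should print 8 and return `True`.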

Since the NVIDIA driver isn't installed yet, `nvidia-smi` won't work at this point.

Next, go to NVIDIA's official driver download page, choose the RTX 2080 Ti as the device and Linux as the operating system, and click Search.

At the time of writing, there was a recently released version: 550.54.14.

Download the `.run` file, make it executable with `chmod a+x`, and run it with `sudo`.
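The download-and-run steps can also be scripted. Below is a minimal sketch in Python, assuming the installer keeps NVIDIA's usual `NVIDIA-Linux-x86_64-<version>.run` file name; `installer_path`, `make_executable`, and `run_installer` are hypothetical helpers. `make_executable` mirrors `chmod a+x` by adding the execute bit for user, group, and others.

```python
import os
import stat
import subprocess


def installer_path(version: str, directory: str = ".") -> str:
    # Assumed naming scheme for NVIDIA's Linux x86_64 driver packages
    return os.path.join(directory, f"NVIDIA-Linux-x86_64-{version}.run")


def make_executable(path: str) -> None:
    # Same effect as `chmod a+x path`: add execute permission for user, group, others
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)


def run_installer(path: str) -> int:
    # Run the installer under sudo; the terminal stays attached so you can
    # answer its interactive prompts. Returns the installer's exit code.
    return subprocess.run(["sudo", os.path.abspath(path)]).returncode
```

Typical use: `path = installer_path("550.54.14")`, then `make_executable(path)` and `run_installer(path)`.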

Choose the options that suit you. In my case, I just kept pressing Enter to accept all the defaults. After the installation, reboot your computer. Once it's back up, run `nvidia-smi`. You should see all 8 GPUs properly recognized, each showing 22 GB of VRAM.
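After the reboot, the same verification can be scripted against `nvidia-smi`'s CSV query mode. Below is a minimal sketch in Python using `nvidia-smi --query-gpu=index,name,memory.total --format=csv,noheader,nounits`, which reports memory in MiB. The 22000 MiB threshold is an assumption meant to separate 22 GB cards from stock 11 GB ones, so compare it with what your cards actually report. `parse_gpu_query`, `all_upgraded`, and `query_gpus` are hypothetical helpers.

```python
import subprocess
from typing import List, Tuple

QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_query(csv_text: str) -> List[Tuple[int, str, int]]:
    """Parse lines like '0, NVIDIA GeForce RTX 2080 Ti, 22528' into tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, name, mem_mib = (field.strip() for field in line.split(","))
        gpus.append((int(index), name, int(mem_mib)))
    return gpus


def all_upgraded(gpus, expected_count: int = 8, min_mib: int = 22000) -> bool:
    # True only if every expected card is present and reports the upgraded VRAM
    return len(gpus) == expected_count and all(mem >= min_mib for _, _, mem in gpus)


def query_gpus() -> List[Tuple[int, str, int]]:
    # Requires the NVIDIA driver to be installed and loaded
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_query(out)
```

On the finished rig, `all_upgraded(query_gpus())` should return `True`.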

This is what my rig looks like:

Finally, let me share my cost calculation. If you build a rig like mine, you'll pay about $1,000 for the used 4029 chassis, $500 for two Intel Xeon Gold 6162 CPUs, $800 for 256 GB of DDR4 RAM, $200 for a 2 TB SSD, and $4,000 for the GPUs ($500 each). In total, you pay $6,500 for 22 GB x 8 = 176 GB of GPU VRAM and 96 CPU threads. Each card's inference speed is nearly on par with an A10 24G. By contrast, if you rent a comparable configuration (8 x A10 24G) from AWS, the same $6,500 lasts you at most two months.
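The arithmetic above is easy to redo with your own prices. Below is a minimal sketch in Python using the used-market prices quoted in this post. The cloud hourly rate is deliberately left as an input, since AWS pricing varies by region and over time. `rig_summary` and `break_even_days` are hypothetical helpers.

```python
GPU_COUNT = 8
VRAM_PER_GPU_GB = 22

# Used-market prices quoted in this post (USD)
PARTS_USD = {
    "Supermicro 4029 chassis + motherboard": 1000,
    "2 x Intel Xeon Gold 6162": 500,
    "256 GB DDR4 RAM (8 x 32 GB)": 800,
    "2 TB SSD": 200,
    "8 x RTX 2080 Ti 22G": GPU_COUNT * 500,
}


def rig_summary(parts: dict = PARTS_USD) -> tuple:
    """Return (total cost in USD, total VRAM in GB, USD per GB of VRAM)."""
    total = sum(parts.values())
    vram_gb = GPU_COUNT * VRAM_PER_GPU_GB
    return total, vram_gb, total / vram_gb


def break_even_days(total_usd: float, cloud_usd_per_hour: float) -> float:
    """Days of round-the-clock cloud rental that cost as much as the rig."""
    return total_usd / cloud_usd_per_hour / 24
```

With the prices above, `rig_summary()` gives a $6,500 total, 176 GB of VRAM, and roughly $36.9 per GB. Pass the current on-demand hourly price of an 8-GPU A10G instance to `break_even_days` to see how long renting takes to match the purchase. The sketch ignores electricity, hosting, and maintenance.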

I sincerely invite you to try the upgraded RTX 2080 Ti 22G and do the math to see how much it saves you and your customers.