The original video is on Douyin by @GeekBuy: https://v.douyin.com/ikWUxNXN/

I have translated his content below for reference.

We have the 4090, the 4080, the 4070 Ti, and the cheapest option, a modded 22GB 2080 Ti. Let’s set aside the actual value of these GPUs for a moment. All of them are consumer-grade cards with at least 12GB of VRAM. As AI-generated content (AIGC) becomes as commonplace as Office, more and more people are running GPT-like dialogue models, image generation models, and AI video rendering applications locally.

Some image generation models need as little as 4GB of VRAM, while dialogue models typically need at least 6GB. More VRAM not only speeds up image generation but also lets you run larger dialogue models, which generally produce better output. For example, if you use AI to write a plan, a 12GB card and a 24GB card will give different results, and a model that runs short of VRAM may crash or start generating gibberish. If you want efficient local AI applications, I recommend a graphics card with at least 12GB of VRAM. In general, the more VRAM, the better.

Aside from top-tier cards like the 4090 and 3090, which have 24GB of VRAM, even a $2000 4080 has just 16GB. Tesla data-center cards are out of the question for most users: they’re too expensive and difficult to deploy. Each of them does have a rough consumer-grade counterpart, though: the P100 corresponds to the 1080, the V100 to the 2080, the A100 to the 3090, and the H100 to the 4090. Against that backdrop, the modded 22GB 2080 Ti at just $500 is undoubtedly a solid choice. But how powerful is it?

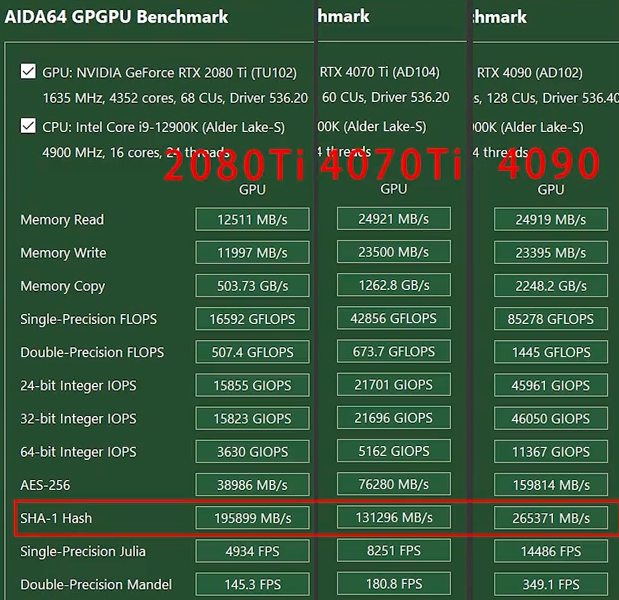

Let’s find out by running some benchmarks, pitting the $500 2080 Ti against the 4070 Ti and the 4090. We used two test platforms:

1. A Z790 motherboard with an i9-12900K, 32GB of DDR5 RAM, and a 2TB SSD.

2. An ASUS TUF B660 with an i5-12490F, 64GB of DDR4 RAM, and a Samsung 980 Pro.

Both setups should be free of bottlenecks. First, we ran AIDA64 to measure GPU performance. The results show that while the 2080 Ti leads in OpenCL-based workloads, it falls behind the 4070 Ti and 4090 in single-precision, double-precision, and INT8 compute. Then again, it costs just $500, versus nearly $950 for the 4070 Ti and $1800 for the 4090, so the price gap is substantial.
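To put that price gap in perspective, here is a minimal Python sketch that divides each card’s price by its VRAM. The prices are the rough figures quoted in this article (including the $2000 4080 mentioned earlier); the VRAM sizes are the cards’ standard capacities (12GB for the 4070 Ti, 16GB for the 4080, 24GB for the 4090) plus 22GB for the modded 2080 Ti. Dollars per GB is a deliberately crude metric that ignores compute speed, so treat it as one input to the value question, not the answer. The function names are hypothetical.

```python
# (price in USD as quoted in this article, VRAM in GB) for each card.
CARDS = {
    "2080 Ti (modded)": (500, 22),
    "4070 Ti": (950, 12),
    "4080": (2000, 16),
    "4090": (1800, 24),
}


def price_per_gb(price_usd: float, vram_gb: float) -> float:
    """Return the dollars paid per gigabyte of VRAM."""
    return price_usd / vram_gb


def rank_by_value(cards: dict[str, tuple[float, float]]) -> list[tuple[str, float]]:
    """Sort cards from cheapest to most expensive per GB of VRAM."""
    ranked = [(name, price_per_gb(price, vram)) for name, (price, vram) in cards.items()]
    return sorted(ranked, key=lambda item: item[1])


if __name__ == "__main__":
    for name, cost in rank_by_value(CARDS):
        print(f"{name:18s} ${cost:6.2f} per GB")
```

With these numbers the modded 2080 Ti works out to roughly $23 per GB, versus $75 for the 4090, about $79 for the 4070 Ti, and $125 for the 4080.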

Next, we ran Stable Diffusion, generating ten batches of two images each. The 2080 Ti was slightly faster than the 4070 Ti but about 30% slower than the 4090. The 2080 Ti also used noticeably more VRAM, while VRAM usage on the 4070 Ti and 4090 stayed below 25%.

Moving on to dialogue models, we tested 6B and 15B models. The 4090 loaded models faster than the 2080 Ti, but once loaded, the two generated responses at nearly the same speed. With the 6B model (GLM1), VRAM usage at times exceeded 16GB, which caused the 4080 to run out of VRAM. The optimized GLM2 stayed under 15GB, making it much easier to run.
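A rough way to see why a 6B model can push past 16GB is to estimate the memory its weights need: parameter count times bytes per parameter. The sketch below assumes FP16 weights by default and counts only the weights; the KV cache, activations, and framework overhead come on top and grow with conversation length. The precision table and function name are illustrative assumptions, not taken from GLM or any specific library.

```python
# Approximate storage cost of one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

GIB = 1024**3  # bytes in one GiB


def weight_memory_gib(num_params: float, dtype: str = "fp16") -> float:
    """Estimate the memory needed for model weights alone, in GiB.

    Deliberately ignores the KV cache, activations, and framework overhead,
    all of which add to real-world VRAM usage.
    """
    return num_params * BYTES_PER_PARAM[dtype] / GIB


if __name__ == "__main__":
    for label, params in [("6B", 6e9), ("15B", 15e9)]:
        for dtype in BYTES_PER_PARAM:
            print(f"{label:>3} {dtype:>4}: {weight_memory_gib(params, dtype):5.1f} GiB")
```

For a 6B model in FP16, the weights alone come to about 11.2GiB, which leaves little headroom on a 16GB card once the cache grows during a long conversation. A 15B model in FP16 would need about 27.9GiB for its weights, more than even a 24GB card holds, which is why models of that size are usually run quantized on consumer GPUs (about 14GiB at 8-bit).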

For AI video rendering, we used Topaz Video AI 3.3, the latest version at the time, to upscale a 1080p 30fps video to 4K 30fps. The 4090 finished in 5 minutes 16 seconds, while the modded 2080 Ti finished in 5 minutes 3 seconds. The 2080 Ti was slightly faster, although the gap may simply come from background processes. This suggests that not every AI application needs the latest GPU to perform well.
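To judge whether a 13-second gap means anything, it helps to express it as a percentage. This small Python sketch converts the two Topaz Video AI times reported above into seconds and computes how much longer the slower run took relative to the faster one; the helper names are hypothetical.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert an m:ss duration to total seconds."""
    return minutes * 60 + seconds


def relative_gap_pct(time_a: float, time_b: float) -> float:
    """Percentage by which the slower of two runs exceeds the faster one."""
    fast, slow = sorted((time_a, time_b))
    return (slow - fast) / fast * 100


if __name__ == "__main__":
    rtx_4090 = to_seconds(5, 16)    # 316 s, as reported in the video
    rtx_2080_ti = to_seconds(5, 3)  # 303 s, as reported in the video
    gap = relative_gap_pct(rtx_4090, rtx_2080_ti)
    print(f"Difference: {abs(rtx_4090 - rtx_2080_ti)} s ({gap:.1f}%)")
```

Running it prints `Difference: 13 s (4.3%)`. A gap of about 4% from a single run per card is small enough that background activity could plausibly account for it; averaging several runs per card would give a firmer answer.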

In summary, large VRAM is essential for local AI applications. For GPT-style models, the 4090 comes out ahead of the 3090 and the modded 2080 Ti, mainly by loading models faster, and it beats the 4080, whose 16GB of VRAM can run out. In image generation, the 2080 Ti slightly outperforms the 4070 Ti but trails the 4090 by about 30%. In dialogue models, the 2080 Ti and 4090 performed similarly, which makes the $500 2080 Ti an excellent value. And for AI video rendering, the modded 2080 Ti still holds its own, suggesting that software optimization can sometimes matter more than newer hardware.